Data Center

Highest efficiency, highest power density

Over 40% of data center costs relate to power (electricity and cooling). As data center traffic accelerates, silicon's ability to process power effectively and efficiently is running into the physical limits of the material.

As a result, data center architects are turning to wide bandgap (WBG) gallium nitride (GaN) and silicon carbide (SiC) semiconductors to help them optimize performance while keeping energy consumption as low as possible, even as rack power levels rise.

The proven reliability of mass-production, highly integrated GaNFast ICs and GeneSiC power devices enables efficiency improvements of up to 10%, saving up to $1.9B per year.

App Notes, Articles

High-Density Power for the AI Revolution


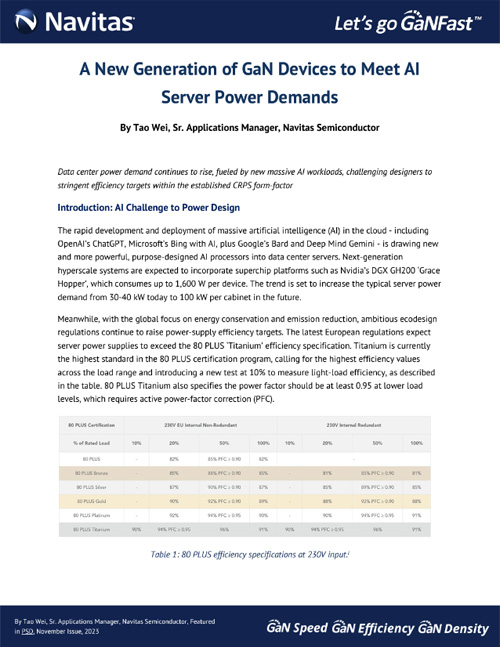

A New Generation of GaN Devices to Meet AI Server Power Demands


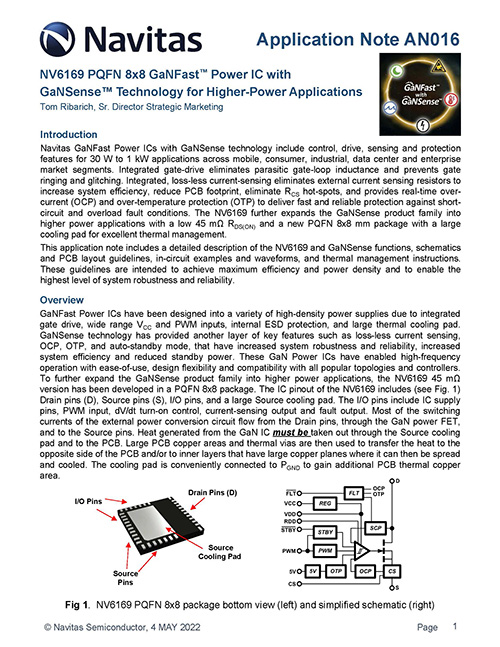

AN016: NV6169 PQFN 8×8 GaNFast™ Power IC with GaNSense™ Technology for Higher-Power Applications
